NZ researchers propose framework to bridge gap between AI principles and governance

The country’s former chief science advisor, Sir Peter Gluckman, is leading the development of a framework to evaluate artificial intelligence that could also be applied to quantum computing and even gene editing.

Sir Peter, the director of Koi Tū, and Hema Sridhar, Koi Tū’s strategic adviser for technological futures, are the lead authors of a discussion paper intended to inform the many global and national discussions on AI now under way.

While UNESCO, the OECD, the European Commission and numerous other international bodies have released principles for the responsible use of AI, the researchers point out that there is “a large ontological gap between such principles and a governance or regulatory framework”.

They aren’t proposing AI-specific regulation. Instead, they’ve drafted a framework that considers the individual, societal, economic and geopolitical impacts of a new technology, which could inform the governance of AI and other emerging technologies.

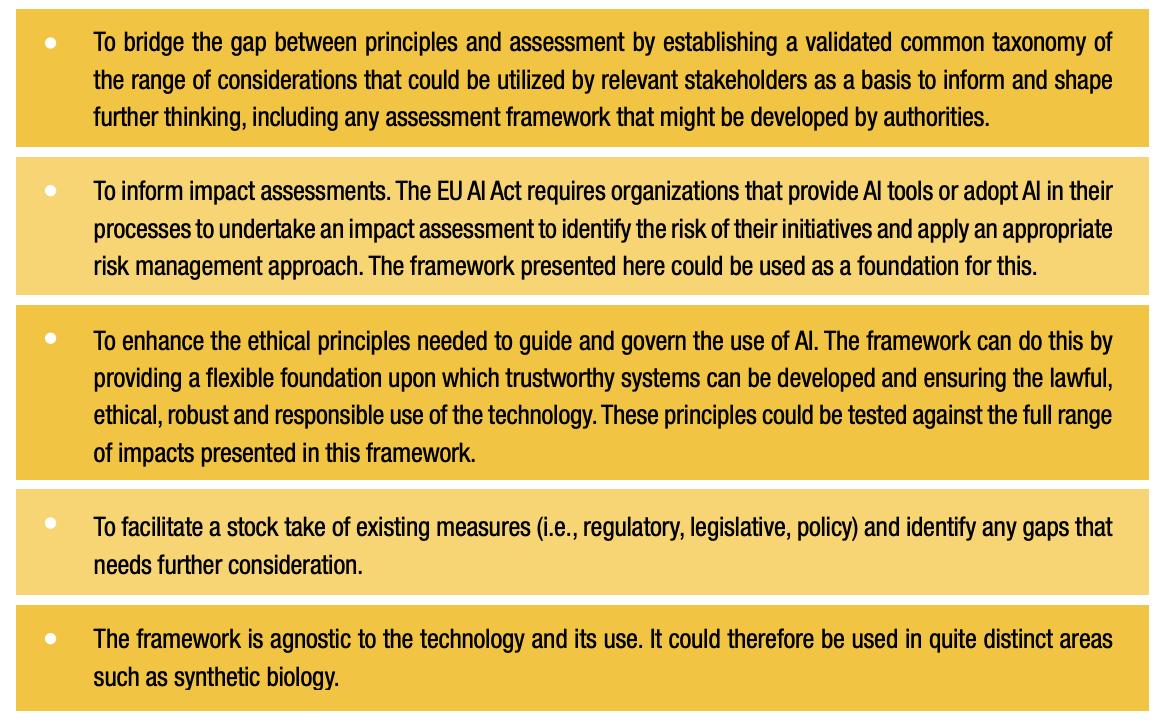

How the proposed ISC framework could be used.

“AI is rapidly pervasive; some properties may only become apparent after release, and the technology could have both malevolent and benevolent applications. Important values dimensions will influence how any use is perceived. Furthermore, there may be geo-strategic interests at play,” the researchers write in their discussion paper for the International Science Council (ISC). Sir Peter is the current president of the ISC, a non-profit organisation that represents the world’s national scientific academies.

The paper was released ahead of the AI Safety Summit being held in the UK next week. Sridhar says the framework, which is still in its early stages of development, could act like a checklist for policymakers, decision-makers, and the private sector alike.

“We’re not saying technology is good or bad. We’re saying technologies are going to be used. It’s about how you make it most likely that societies will benefit and not be harmed by the technology,” she says.

“It gives a layer of objectiveness to an area that has traditionally been quite subjective. And in many cases, we’re seeing the capability of the technology is evolving. So the framework gives an objective way in which you can say, here’s what we assessed as of today, and in a year or two you can review it and see if these risks have manifested, or if they haven’t. And then make sure that our measures are appropriate for what the technology actually is,” she says.

Negative uses redefined

Sir Peter says what counts as a negative use of technology has changed, particularly with the rise of fast-changing digital technologies.

“Negative used to be simply that it would produce a bomb or a weapon and kill people. Negative now means what it will do to society, what it will do to mental health, and what it will mean for society. So the raft of downsides has changed,” he says.

The ISC will look to form a working group to develop the analytical framework, based on feedback on the discussion paper.