From Anthropic to Titan - AWS is going big on generative AI

Microsoft and OpenAI may have dominated much of the discussion around generative AI since teaming up on the technology, but AWS is ramping up its own offerings too.

AWS this week announced an investment of up to US$4 billion in generative AI company Anthropic, in which it will take a minority stake.

Anthropic will expand its use of the AWS cloud computing platform to run its large language models, use the computer chips that Amazon has developed, and make its AI models available to AWS customers long-term.

The big public cloud platforms are best positioned to play a dominant role in the generative AI space. Their vast computing capacity is key to developing and improving the foundation models underpinning services such as ChatGPT and Midjourney, as well as models like Meta’s open-source Llama 2.

Bedrock's foundational model line-up

Amazon has its own AI models, including Titan Text, a generative large language model (LLM) for tasks such as summarisation, text generation, classification, open-ended Q&A, and information extraction.

But Rada Stanic, AWS chief technologist based in Sydney, told Tech Blog that the public cloud company had a strategy of making a wide range of third-party foundational models available to customers via its platform.

“We at AWS made a decision that the core part of our strategy will be to keep the options open for the customers so that they can choose from what exists today and what will exist into the future,” she says.

AWS Bedrock is an on-demand service offering access to Titan, as well as Anthropic’s Claude 2 and other foundation models from AI21 Labs, Cohere and Stability AI.

“Let us run the model on our infrastructure, which [we] will manage, and you focus on what you want to get out of that: choosing the right model and making an API call,” Stanic says.

Agents for Bedrock is a new AWS feature that Stanic says can speed up the release of generative AI applications and handle more complex tasks than the current generation of chatbots can, such as booking flights or processing insurance claims.

With an AWS data centre region due to open in Auckland next year, Stanic says a priority will be ensuring the region’s data centres are equipped with the hardware needed to run generative AI services.

For New Zealand customers, the key advantage of the Auckland region will be better performance for workloads that need “low latency for mission-critical applications”, Stanic says.

Go local for low latency

Stanic, who spent many years in the telecoms sector at Cisco and Alcatel before joining AWS around five years ago, says communications applications are a key use case for the lower latency that comes with being closer to a hyperscale data centre.

“The software that handles the whole control plane for establishing voice [and] video calls, that's latency-sensitive, because you really have to quickly, in the order of milliseconds, complete the control plane establishment,” Stanic says.

“In financial services, there will be extremely latency-sensitive payments transactions. Again, that's now a great candidate to put it in an Auckland region, as opposed to the Sydney region,” she adds.

But she doesn’t expect New Zealand-based AWS customers to shift data and applications wholesale to the Auckland region once it is up and running.

“If you were able to run your business in a particular way, and it's worked for you by running things in the Sydney region or wherever it may be, then you wouldn't just move it for the sake of it, because there’s a cost,” Stanic says.

Telcos pursuing GenAI chatbots, software development

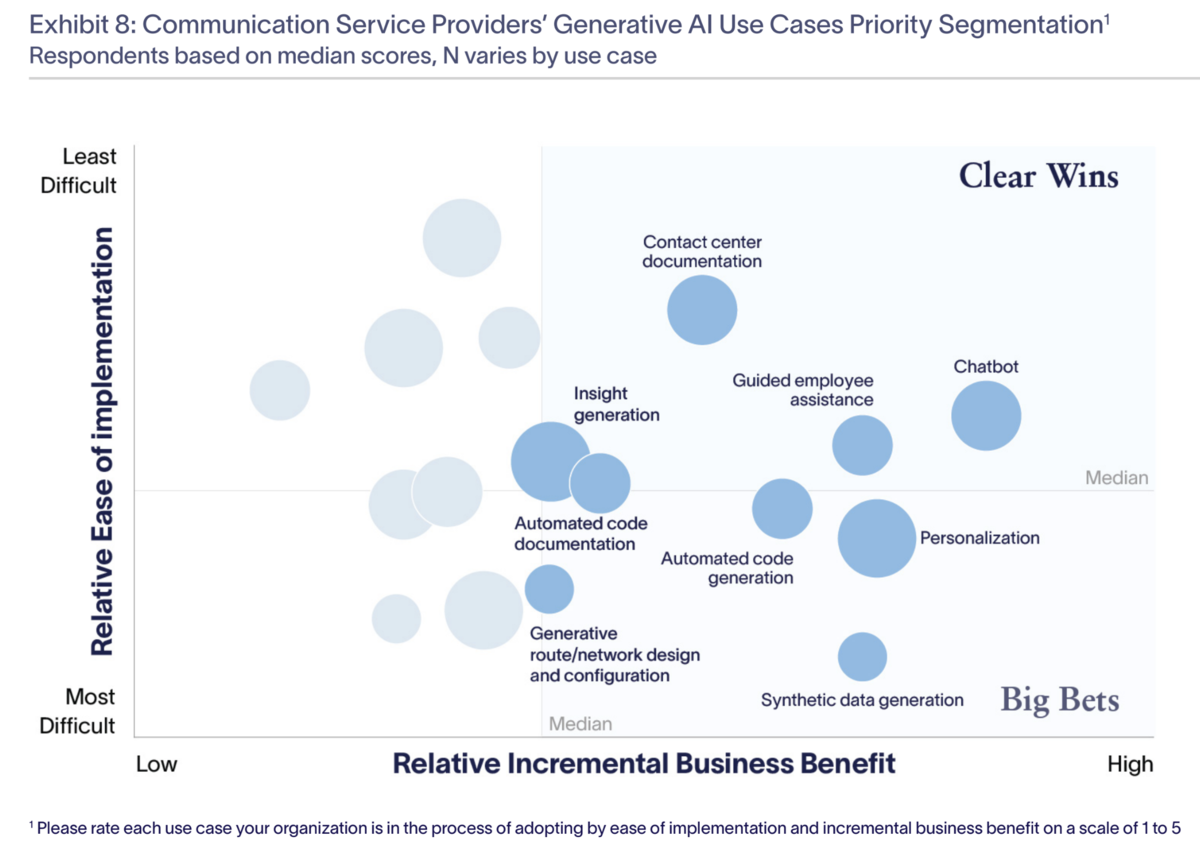

AWS this week released research showing how generative AI is being taken up in the telecoms industry.

A survey undertaken for AWS by Altman Solon, a global strategy consulting firm, gathered feedback from around 100 telecoms and media executives across the US, Western Europe and Asia Pacific. It found that 19% of respondents’ organisations had already implemented generative AI or were in the process of doing so. That figure is expected to rise to 34% within the next year.

Ishwar Parulkar, AWS chief technology officer, telecom and edge cloud, says the research shows that intelligent chatbots powered by generative AI are the technology telecoms operators are most commonly pursuing in a bid to improve customer service.

“An interface that is based on natural language prompts and can generate responses in a more intelligent way is the top of the list and by far, I mean it's almost every telco is working on this,” he told Tech Blog.

Source: Altman Solon

Generative AI uptake among communications companies in Asia Pacific stood at 16%, held back by what the study describes as “localization challenges, such as language”.

The full AWS and Altman Solon whitepaper, Telecommunications Generative AI Study, is available to download.